Understanding the Power of Advanced Data Integration

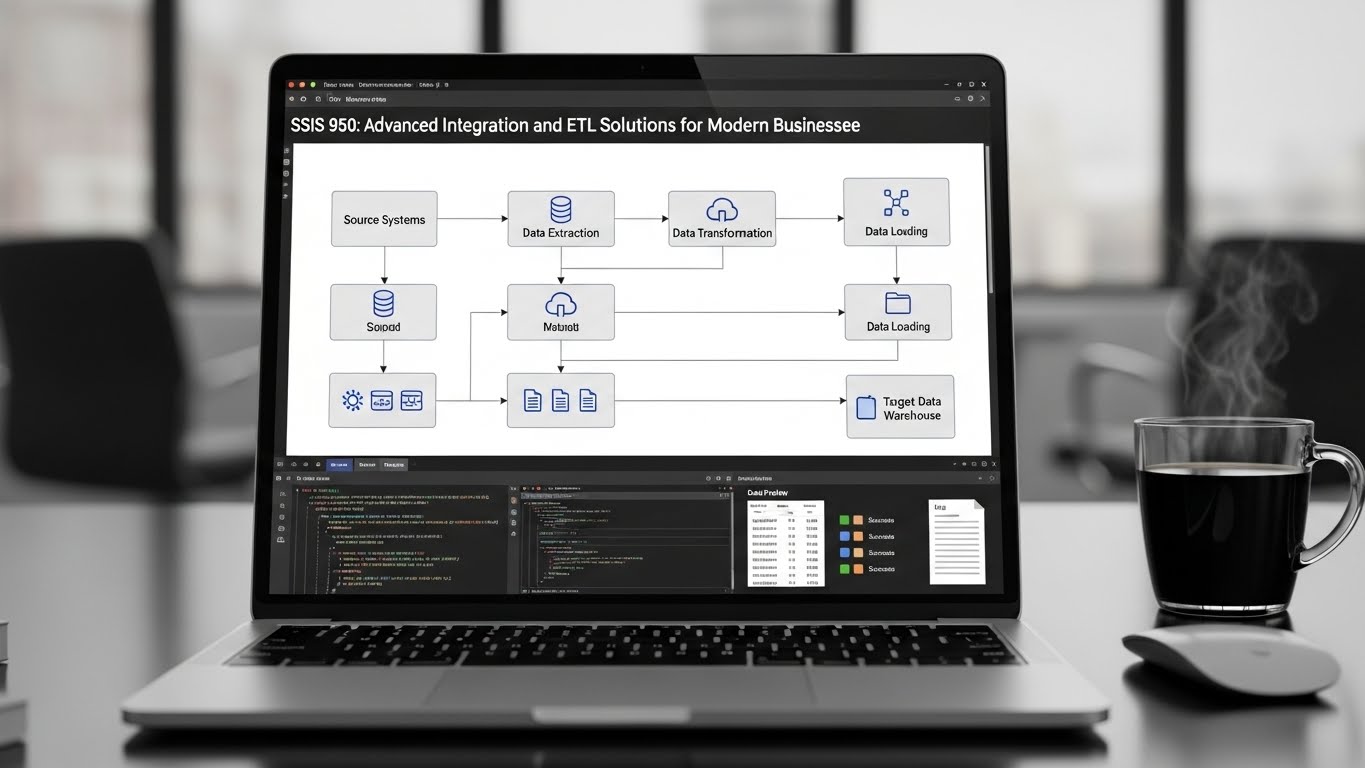

SSIS 950 is a data integration platform that helps businesses manage complex information workflows. It enables organizations to extract, transform, and load (ETL) data across multiple systems. As data volumes grow, companies rely on tools like this to consolidate information from diverse sources reliably. A robust integration framework helps organizations streamline operations and make better-informed decisions.

Core Capabilities and Technical Features

Robust Data Extraction Mechanisms

The platform pulls information from databases, flat files, and cloud applications. It supports a range of connection protocols, so it can integrate with both legacy systems and modern platforms. Users can configure extraction processes to run on a schedule or to trigger automatically on specific events. As a result, repositories stay up to date without manual intervention or constant monitoring by technical teams. This automation reduces human error and keeps data collection consistent across the organization.

Advanced Transformation Capabilities

Transformation features let users cleanse, standardize, and enrich data before loading it into destination systems. During processing, organizations can apply business rules, perform calculations, and merge information from multiple sources. The solution provides built-in functions for common transformation tasks and supports custom scripts for specialized requirements. This flexibility lets businesses handle unusual data scenarios that standard tools might not address. Clean, properly formatted information leads to more accurate analytics and better strategic decisions.
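As a rough illustration of the cleanse, standardize, and enrich steps described above, here is a minimal Python sketch. It is not the platform's own API; the field names, the input date format, and the "high tier" business rule are all assumptions made for the example.

```python
from datetime import datetime

def transform(record):
    """Cleanse, standardize, and enrich one raw record (hypothetical fields)."""
    # Cleanse: collapse stray whitespace and normalize casing.
    name = " ".join(record["name"].split()).title()
    email = record["email"].strip().lower()
    # Standardize: convert dates to ISO 8601 (input format is an assumption).
    signup = datetime.strptime(record["signup"].strip(), "%m/%d/%Y").date().isoformat()
    amount = round(float(record["amount"]), 2)
    # Enrich: apply an assumed business rule to derive a new field.
    tier = "high" if amount >= 1000 else "standard"
    return {"name": name, "email": email, "signup": signup,
            "amount": amount, "tier": tier}

raw = {"name": "  ada   LOVELACE ", "email": " Ada@Example.COM ",
       "signup": "03/07/2024", "amount": "1250.5"}
clean = transform(raw)
print(clean)
```

Keeping each rule in one small function makes it easy to test the transformation separately from extraction and loading.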

Efficient Loading and Destination Management

Loading mechanisms optimize performance when transferring large data volumes to target databases and data warehouses. The platform uses batching and parallel processing to reduce transfer times and resource consumption. Users can configure error handling that manages exceptions without halting the entire pipeline. This reliability is essential for mission-critical processes that organizations depend on daily. Fast, accurate data delivery supports near-real-time reporting and timely insights.
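The idea of batched loading with error handling that does not stop the whole run can be sketched in Python with the standard `sqlite3` module. This is an illustration under stated assumptions, not the platform's loader: the `target` table, the batch size, and the `load_in_batches` helper are hypothetical.

```python
import sqlite3

INSERT_SQL = "INSERT INTO target (id, name) VALUES (?, ?)"

def load_in_batches(conn, rows, batch_size=2):
    """Insert rows in batches; divert failing rows instead of aborting the run."""
    rejected = []
    for start in range(0, len(rows), batch_size):
        batch = rows[start:start + batch_size]
        try:
            with conn:  # commits on success, rolls back the batch on error
                conn.executemany(INSERT_SQL, batch)
        except sqlite3.IntegrityError:
            # Retry the failed batch row by row to isolate the bad records.
            for row in batch:
                try:
                    with conn:
                        conn.execute(INSERT_SQL, row)
                except sqlite3.IntegrityError as exc:
                    rejected.append((row, str(exc)))
    return rejected

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE target (id INTEGER PRIMARY KEY, name TEXT NOT NULL)")
rows = [(1, "a"), (2, "b"), (3, None), (4, "d"), (1, "dup")]
rejected = load_in_batches(conn, rows)
loaded = conn.execute("SELECT COUNT(*) FROM target").fetchone()[0]
print(loaded, [row for row, _ in rejected])
```

Good rows in a failed batch are still loaded, and the rejected rows are returned with their error messages so they can be written to an error table or log for review.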

Benefits for Enterprise Organizations

Enhanced Operational Efficiency

Organizations can improve operational efficiency substantially when they automate previously manual data integration processes. IT teams can then redirect resources from routine maintenance to strategic initiatives. The platform can shorten the time needed to make information available across departments, in some cases from days to hours. Employees get current data for analysis, reporting, and decision-making without the delays caused by outdated integration methods. This efficiency translates into cost savings and improved productivity.

Improved Data Quality and Consistency

The solution enforces data quality standards through validation rules and error detection built into transformation processes. Organizations can standardize formats, eliminate duplicates, and correct inconsistencies before data reaches analytical systems. Consistent, accurate information increases trust in the reports and analytics that stakeholders use for critical decisions. Data governance also becomes easier when a centralized platform manages all integration workflows and maintains audit trails. High-quality information assets can become a competitive differentiator.

Scalability for Growing Businesses

The architecture supports horizontal and vertical scaling to accommodate increasing data volumes as businesses expand. Organizations can add new data sources and destinations without redesigning the entire integration framework. Performance optimization features help the platform maintain speed and reliability as workloads grow. This scalability protects technology investments and helps avoid costly system replacements as companies mature. Growing enterprises need solutions that adapt to changing requirements rather than constrain them.

Implementation Best Practices

Planning Your Integration Strategy

Successful implementations begin with thorough planning that identifies all data sources, transformation requirements, and destination systems. First, organizations should map current data flows and document pain points in existing integration processes. Stakeholders from IT, business units, and analytics teams must collaborate to define requirements and success metrics. Clear planning prevents scope creep and helps the implementation deliver measurable value. Well-defined objectives guide technical decisions and help teams prioritize features during configuration and deployment.

Designing Efficient Workflows

Workflow design significantly affects the performance, maintainability, and reliability of integration processes over time. Developers should create modular packages that each handle a specific task rather than building monolithic workflows that become difficult to maintain. Reusable components reduce development time and keep integration processes consistent across the organization. Proper error handling and logging help teams quickly identify and resolve issues when they occur. Efficient design today prevents the technical debt and maintenance headaches that plague poorly architected systems.
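The modular approach with logging and error handling can be sketched in plain Python. This is only an analogy for how small, single-purpose packages compose; the step functions and the `run_pipeline` helper are hypothetical and are not part of the platform.

```python
import logging

logging.basicConfig(level=logging.INFO, format="%(levelname)s %(message)s")
log = logging.getLogger("pipeline")

def extract(_):
    """Stand-in for a source read; returns raw values."""
    return [" alice ", "BOB", ""]

def clean(rows):
    """Trim, lowercase, and drop empty values."""
    return [r.strip().lower() for r in rows if r.strip()]

def load(rows):
    """Stand-in for a destination write."""
    log.info("loaded %d rows", len(rows))
    return rows

def run_pipeline(steps, data=None):
    """Run each step in order, logging progress and naming the step that fails."""
    for step in steps:
        try:
            data = step(data)
            log.info("step %s ok", step.__name__)
        except Exception:
            log.exception("step %s failed", step.__name__)
            raise
    return data

result = run_pipeline([extract, clean, load])
print(result)
```

Because each step is an ordinary function, it can be reused in other pipelines and tested on its own, and the log shows exactly which step failed.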

Testing and Validation Procedures

Comprehensive testing confirms that integration workflows perform correctly under varied conditions and data scenarios before production deployment. Teams should validate data accuracy by comparing source records with destination records after transformation. Performance testing identifies bottlenecks and optimization opportunities that might not appear during initial development with small datasets. User acceptance testing confirms that business requirements translate correctly into technical implementations that deliver the expected results. Thorough testing prevents costly mistakes and builds confidence in the reliability of critical data pipelines.
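One way to compare source and destination records is to fingerprint each row and report the differences by key. The sketch below is a hedged illustration in Python; `row_fingerprint` and `reconcile` are hypothetical helpers, and it assumes the source rows have already been passed through the same transformation as the destination rows.

```python
import hashlib

def row_fingerprint(row):
    """Stable hash of a row's values, used to compare two copies of a record."""
    joined = "|".join("" if value is None else str(value) for value in row)
    return hashlib.sha256(joined.encode("utf-8")).hexdigest()

def reconcile(source_rows, dest_rows, key_index=0):
    """Report keys missing from, unexpected in, or different at the destination."""
    src = {row[key_index]: row_fingerprint(row) for row in source_rows}
    dst = {row[key_index]: row_fingerprint(row) for row in dest_rows}
    return {
        "missing": sorted(k for k in src if k not in dst),
        "unexpected": sorted(k for k in dst if k not in src),
        "mismatched": sorted(k for k in src if k in dst and src[k] != dst[k]),
    }

source = [(1, "a"), (2, "b"), (3, "c")]
dest = [(1, "a"), (2, "B"), (4, "d")]
report = reconcile(source, dest)
print(report)
```

An empty report for all three lists is a reasonable pass criterion for an automated post-load check.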

Performance Optimization Techniques

Memory and Resource Management

Optimizing memory usage prevents performance degradation when processing datasets that exceed available system memory. Developers can configure buffer sizes and adjust parallelism settings to balance speed against resource consumption. Monitoring resource utilization during peak loads helps identify where hardware upgrades or configuration changes are needed. Proper resource management keeps performance consistent and prevents crashes that disrupt business operations and schedules. Efficient systems deliver a better return on infrastructure investments and reduce operational costs over time.
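The effect of a bounded buffer can be illustrated with a small Python generator that processes rows in fixed-size chunks instead of holding the whole dataset in memory. This is a conceptual sketch only; `chunked` and the chunk size are assumptions and do not correspond to a specific platform setting.

```python
from itertools import islice

def chunked(iterable, size):
    """Yield lists of at most `size` items so only one chunk is in memory at a time."""
    iterator = iter(iterable)
    while True:
        chunk = list(islice(iterator, size))
        if not chunk:
            return
        yield chunk

# Process a large stream of rows without materializing it all at once.
total = 0
for chunk in chunked(range(10), 4):
    total += sum(chunk)
print(total)
```

A larger chunk size usually means fewer round trips but more memory per chunk, which is the same trade-off that buffer size settings control.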

Indexing and Query Optimization

Strategic indexing on source and destination tables can dramatically improve extraction and loading speeds during workflow execution. Optimized queries also reduce database server load and the time required to retrieve data. Developers should analyze execution plans to find slow-running queries that bottleneck overall workflow performance. Database administrators and integration developers must collaborate to balance the benefits of indexing against its maintenance overhead and storage costs. Well-tuned queries and indexes create responsive systems that meet service level agreements and user expectations.
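To show what analyzing an execution plan looks like in practice, the Python sketch below uses SQLite's `EXPLAIN QUERY PLAN` before and after adding an index. SQLite stands in for the real source database here, and the `orders` table and index name are hypothetical; other database engines expose plans through their own commands.

```python
import sqlite3

def query_plan(conn, sql, params=()):
    """Return the human-readable detail column of SQLite's query plan."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return " | ".join(row[3] for row in rows)

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)"
)
sql = "SELECT id, total FROM orders WHERE customer_id = ?"

before = query_plan(conn, sql, (42,))  # plan without an index on customer_id
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
after = query_plan(conn, sql, (42,))   # plan once the index exists

print(before)
print(after)
```

The first plan reports a scan of the whole table, while the second reports a search that uses the new index. The exact wording of the plan text varies between SQLite versions.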

Incremental Loading Strategies

Incremental loading processes only new or changed records rather than reprocessing entire datasets on each update. As a result, workflows complete faster and consume fewer resources while keeping integrated systems current. Organizations can use change data capture or timestamp-based filtering to identify the records that need processing. This approach is particularly valuable for large historical datasets that change infrequently but still require regular updates. Incremental strategies allow more frequent refreshes without overwhelming system capacity or extending processing windows.
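Timestamp-based filtering is often implemented with a stored "watermark" that records how far the last run got. The following Python sketch shows the pattern using in-memory SQLite databases; the `customers` and `etl_watermark` tables and the `incremental_load` helper are hypothetical, and it assumes every change updates a `modified_at` column with a sortable ISO 8601 timestamp.

```python
import sqlite3

def incremental_load(src, dst):
    """Copy only rows modified since the last recorded watermark."""
    (watermark,) = dst.execute("SELECT last_modified FROM etl_watermark").fetchone()
    changed = src.execute(
        "SELECT id, name, modified_at FROM customers "
        "WHERE modified_at > ? ORDER BY modified_at",
        (watermark,),
    ).fetchall()
    if not changed:
        return 0
    with dst:  # upsert rows and advance the watermark in one transaction
        dst.executemany(
            "INSERT OR REPLACE INTO customers (id, name, modified_at) VALUES (?, ?, ?)",
            changed,
        )
        dst.execute("UPDATE etl_watermark SET last_modified = ?", (changed[-1][2],))
    return len(changed)

src = sqlite3.connect(":memory:")
dst = sqlite3.connect(":memory:")
for db in (src, dst):
    db.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, modified_at TEXT)")
dst.execute("CREATE TABLE etl_watermark (last_modified TEXT)")
dst.execute("INSERT INTO etl_watermark VALUES ('1970-01-01T00:00:00')")
src.executemany("INSERT INTO customers VALUES (?, ?, ?)", [
    (1, "Ada", "2024-01-01T10:00:00"),
    (2, "Grace", "2024-01-02T09:30:00"),
])

first = incremental_load(src, dst)   # initial run copies both rows
src.execute(
    "UPDATE customers SET name = 'Grace H.', modified_at = '2024-01-03T08:00:00' WHERE id = 2"
)
second = incremental_load(src, dst)  # only the changed row is copied
third = incremental_load(src, dst)   # nothing has changed since the last run
print(first, second, third)
```

Updating the watermark in the same transaction as the data keeps the two in step if a run fails partway. Note that this simple filter does not detect deleted rows, which is one reason change data capture is sometimes preferred.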

Security and Compliance Considerations

Data Encryption and Protection

The platform supports encryption for data in transit and at rest to protect sensitive information throughout processing. Organizations can also implement column-level encryption for personally identifiable information and other confidential data elements. Access controls ensure that only authorized personnel can view, modify, or execute integration workflows and configurations. These measures help organizations comply with regulations such as GDPR and HIPAA, as well as industry-specific data protection requirements. Strong security practices protect company reputation and help prevent costly breaches that damage customer trust.

Audit Trails and Monitoring

Comprehensive logging creates audit trails that track data movements, transformations, and user actions within the platform. Organizations can review these logs to identify unauthorized access attempts, unusual patterns, or potential security incidents. Monitoring dashboards provide real-time visibility into workflow execution status, errors, and performance metrics. Compliance officers can generate reports that demonstrate adherence to data handling policies and regulatory requirements during audits. Transparent operations and detailed records are essential when organizations must demonstrate compliance to authorities.

Troubleshooting Common Challenges

Connectivity and Authentication Issues

Connection failures often result from network configuration, firewall rules, or expired credentials. First, administrators should verify network connectivity and confirm that authentication credentials are still valid and properly configured. Testing connections in isolation helps determine whether an issue originates in a source system, the platform, or a destination. Documenting connection settings and dependencies speeds up troubleshooting when problems arise. Proactive monitoring alerts teams to connectivity issues before they affect critical business processes and reporting.
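A quick way to test connectivity in isolation is to check whether a TCP connection to the server's host and port can be opened at all, before involving credentials or drivers. The Python sketch below uses only the standard library; `can_reach` is a hypothetical helper, and the host and port in the usage example are placeholders (1433 is the default SQL Server port).

```python
import socket

def can_reach(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port can be opened within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name resolution failures.
        return False

if __name__ == "__main__":
    # Placeholder target; replace with the actual source or destination server.
    print(can_reach("localhost", 1433))
```

If this check fails, the problem lies in the network path or firewall rather than in credentials. If it succeeds but the workflow still cannot connect, authentication or driver configuration is the more likely cause.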

Performance Degradation Problems

Gradual performance decline often indicates growing data volumes, inefficient queries, or hardware resources that are insufficient for the workload. Developers should review workflow designs and look for opportunities to optimize transformations, reduce data movement, or parallelize work. Historical performance metrics help teams establish baselines and detect anomalies that signal underlying problems. Regular performance reviews prevent small issues from escalating into major problems that disrupt operations. Continuous improvement keeps the system responsive as business requirements and data volumes evolve.

Data Quality and Validation Errors

Validation errors typically occur when source data fails to meet expected formats, ranges, or business rules. Teams should therefore profile source data thoroughly to understand its characteristics and anticipate potential quality issues. Clear error messages and detailed logging help developers quickly identify problematic records and their root causes. Collaboration with data owners helps address quality issues at the source rather than applying band-aid fixes downstream. Preventing quality problems upstream reduces integration complexity and improves confidence in analytical outputs.
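Format, range, and business-rule checks with clear error messages can be sketched as follows. This Python example is illustrative only: the fields, the age range, and the allowed country list are assumptions, and `validate` and `split_valid` are hypothetical helpers rather than platform features.

```python
ALLOWED_COUNTRIES = {"US", "GB", "DE"}  # assumed reference list

def validate(record):
    """Return a list of rule violations for one record (rules are illustrative)."""
    errors = []
    email = record.get("email")
    if not email or "@" not in email:
        errors.append("email: missing or malformed")
    age = record.get("age")
    if not isinstance(age, int) or not 0 <= age <= 120:
        errors.append("age: expected integer in 0..120")
    if record.get("country") not in ALLOWED_COUNTRIES:
        errors.append("country: not in allowed list")
    return errors

def split_valid(records):
    """Separate clean records from rejects, keeping the reasons for each reject."""
    valid, rejects = [], []
    for record in records:
        errors = validate(record)
        if errors:
            rejects.append({"record": record, "errors": errors})
        else:
            valid.append(record)
    return valid, rejects

records = [
    {"email": "ada@example.com", "age": 36, "country": "GB"},
    {"email": "not-an-email", "age": 200, "country": "FR"},
]
valid, rejects = split_valid(records)
print(len(valid), rejects[0]["errors"])
```

Keeping the rejected record together with every rule it violated gives data owners what they need to correct the problem at the source.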

Future-Proofing Your Integration Infrastructure

Embracing Cloud Integration Patterns

Modern organizations increasingly adopt hybrid architectures that combine on-premises systems with cloud applications and services. The platform supports cloud connectivity that enables seamless data exchange between traditional databases and modern applications. Organizations can gradually migrate workloads to cloud environments while maintaining integration with legacy systems during transitions. Cloud integration capabilities ensure that technology investments remain relevant as industries embrace digital transformation initiatives. Flexible architectures adapt to evolving business models without requiring complete system overhauls that disrupt operations.

Preparing for Advanced Analytics

Integration platforms lay the foundation for advanced analytics, machine learning, and artificial intelligence initiatives across organizations. Clean, consolidated data enables data scientists to build accurate models that drive automation and intelligent decision-making. Real-time integration capabilities support operational analytics that provide immediate insights into business performance and trends. Organizations that invest in robust integration infrastructure position themselves to capitalize on emerging technologies quickly. Strong data foundations separate innovative leaders from companies struggling to extract value from fragmented information.

Conclusion

This integration solution changes how organizations manage data across complex enterprise environments and diverse systems. By providing comprehensive extraction, transformation, and loading capabilities, it helps businesses get more value from their information. Organizations that master these tools gain competitive advantages through faster insights, improved operations, and better decisions. Investment in proper implementation, optimization, and governance pays off in greater business agility and intelligence. Companies that embrace modern integration practices position themselves for success in increasingly data-driven markets.